You can see it in the post thumbnail

/s

dead link

This video is not available on NorthTube.

Original: https://tilvids.com/w/6c411a6e-511a-4912-a9c8-a812afdd0d30

I had to wait 5-6 seconds to visit that site.

The internet got so much worse - in two ways of course.

Who is Kate?

Kate the editor? Or is there an IDE called Kate?

The collaborative, sharing nature of these platforms is a big advantage. (Not just the VS Code Marketplace; we have this with all extension, library, and program package managers.)

Current approaches revolve around

The problem with the latter is that it is not necessarily proof of trustworthiness, only proof that the namespace in its entirety is owned by the same entity.

In my opinion, improvements could be made through

Maybe there could be more coordinated review and approval efforts. For example, if the publisher has a trustworthiness indicator, and the package lists its advocates with their own trustworthiness indicated, you could make a better assessment at a glance.

On the more technical side, beyond the platform itself, there could be a more restrictive and specific permission system. Just as browser extensions ask for permissions on install and/or when specific functionality is used, the same could be implemented for app extensions and lib packages. Platform requirements could mandate minimal defaults, with optional capabilities implemented as optional rather than “ask for everything by default”.
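
As a rough sketch of that idea, assuming a hypothetical marketplace with a made-up set of permission names, a manifest could split minimal install-time `required` permissions from `optional` ones that are only granted when a feature asks for them (browser WebExtensions manifests make a similar split with their `permissions` and `optional_permissions` keys). The `Manifest`, `validate`, `install`, and `request` names and the `max_required` limit are illustrative only, not any real platform's API.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical permission vocabulary, for illustration only.
KNOWN_PERMISSIONS = {"read_workspace", "write_workspace", "network", "spawn_process"}

# The user prompt: receives the permissions being asked for, returns True if approved.
Approver = Callable[[set], bool]


@dataclass
class Manifest:
    name: str
    required: set  # granted at install time; should stay minimal
    optional: set = field(default_factory=set)  # requested only when a feature needs it


@dataclass
class InstalledExtension:
    manifest: Manifest
    granted: set = field(default_factory=set)


def validate(manifest: Manifest, max_required: int = 2) -> list:
    """Return policy violations a marketplace could reject a submission for."""
    problems = []
    unknown = (manifest.required | manifest.optional) - KNOWN_PERMISSIONS
    if unknown:
        problems.append(f"unknown permissions: {sorted(unknown)}")
    if len(manifest.required) > max_required:
        problems.append("too many install-time permissions; move some to optional")
    return problems


def install(manifest: Manifest, approve: Approver) -> InstalledExtension:
    """Ask once for the minimal required set; nothing optional is granted yet."""
    if not approve(set(manifest.required)):
        raise PermissionError(f"{manifest.name}: install declined")
    return InstalledExtension(manifest, set(manifest.required))


def request(ext: InstalledExtension, perm: str, approve: Approver) -> bool:
    """Grant an optional permission at the moment a feature needs it."""
    if perm in ext.granted:
        return True
    if perm not in ext.manifest.optional:
        return False  # never declared in the manifest, so it cannot be granted
    if approve({perm}):
        ext.granted.add(perm)
        return True
    return False
```

For example, a formatter extension declaring `required={"read_workspace"}` and `optional={"network"}` installs with read access only; `network` is added the first time `request` is approved, and an undeclared permission like `spawn_process` is refused outright.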

Minification is a form of obfuscation. It makes it (much) less readable.

Of course you could run a formatter over it. But that’s already an additional step you have to do. By the same reasoning you could run a deobfuscator over more obfuscated code.
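
To illustrate with a Python stand-in (the comment doesn't name a language), round-tripping code through a parser restores indentation and spacing, but the short names a minifier chose survive unchanged, so the meaning still has to be reconstructed by hand. `MINIFIED`, `reformat`, and `identifiers` are made-up names for this sketch; `ast.unparse` needs Python 3.9+ and also drops comments.

```python
import ast

# A hand-written "minified" snippet: no whitespace, single-letter names.
MINIFIED = "def f(a,b):return [x*b for x in a if x>0]"


def reformat(source: str) -> str:
    """Restore layout by parsing and re-emitting the code (comments are lost)."""
    return ast.unparse(ast.parse(source))


def identifiers(source: str) -> set:
    """Collect function, parameter, and variable names from the code."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            names.add(node.name)
        elif isinstance(node, ast.arg):
            names.add(node.arg)
        elif isinstance(node, ast.Name):
            names.add(node.id)
    return names


print(reformat(MINIFIED))
# The formatter fixed the layout, but the names are exactly as opaque as before.
print(sorted(identifiers(reformat(MINIFIED))))
```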

a ~400-page manual

🤌 🤘

What makes you think only GitHub is celebrating?

Let’s call it OG for Open source Games.

let us resurrect the ancient art of BitTorrent

haha

Very interesting. A trove of experience and practical knowledge.

They were able to anticipate most of the loss scenarios in advance because they saw that logical arguments were not prevailing; when that happens, “there’s only one explanation and that’s an alternative motive”. His “number one recommendation” is to ensure, even before the project gets started, that it has the right champion and backing inside the agency or organization; that is the real determiner for whether a project will succeed or fail.

Not very surprising, but still tragic and sad.

I love Nushell in Windows Terminal with Starship; it feels like an evolution of, and a leap forward for, the shell. Structured data, native format transformations, strong querying capabilities, expressive state information.

I was surprised that the linked article went in an entirely different direction. It seems mainly driven by mouse interactions, but I think it has interesting suggestions or ideas even if you disregard mouse control or make it optional.

I don’t know. Can you?

I usually don’t use bash locally, and I don’t change the prompt on remote machines, but Starship works in bash too.

I use Nushell with Starship (cross platform prompt) in Windows Terminal.

~

nu ❯ took 52ms

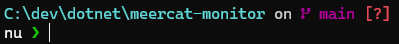

Path above the prompt; the prompt itself with the shell name and a character; and on the right side, how long the previous command took. The character changes color from green to red when the last command exited with a non-zero (unsuccessful) exit code.
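
A toy model of the prompt behavior described above might look like this. It is not how Starship is implemented or configured (Starship uses its own TOML config file); `render_prompt`, `format_duration`, the fixed `width`, and the plain ANSI color codes are assumptions made for the sketch.

```python
# Plain ANSI escape codes for foreground colors.
GREEN = "\x1b[32m"
RED = "\x1b[31m"
RESET = "\x1b[0m"


def format_duration(ms: int) -> str:
    """Show short durations in milliseconds, longer ones in seconds."""
    return f"{ms}ms" if ms < 1000 else f"{ms / 1000:.1f}s"


def render_prompt(path: str, shell: str, last_exit: int, last_ms: int, width: int = 40) -> str:
    """Two lines: path on top, then shell name + status character, duration right-aligned."""
    # Green character after success (exit code 0), red after any non-zero exit code.
    color = GREEN if last_exit == 0 else RED
    left_plain = f"{shell} ❯"
    left = f"{shell} {color}❯{RESET}"
    right = f"took {format_duration(last_ms)}"
    # Pad based on the visible text only, since escape codes take up no columns.
    gap = max(1, width - len(left_plain) - len(right))
    return f"{path}\n{left}{' ' * gap}{right}"


print(render_prompt("~", "nu", last_exit=0, last_ms=52))
print(render_prompt("~", "nu", last_exit=1, last_ms=1500))
```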

In a git repository folder, it shows git info too. The branch symbol won’t show here because this isn’t rendered in a Nerd Font with symbols, so I’ll add a screenshot:

C:\dev\dotnet\meercat-monitor on main [?]

nu ❯ took 1ms

Starship can show a bunch of status/state information for various tools, package managers, docker, etc.

I won’t show my PROMPT_COMMAND; it’s a nu closure, so it’s not really comparable to bash. But as I said, Starship works with bash too.

and include expensive endpoints like git blame, every page of every git log, and every commit in your repository. They do so using random User-Agents from tens of thousands of IP addresses, each one making no more than one HTTP request, trying to blend in with user traffic.

That’s insane. They also mention crawls happening every 6 hours instead of only once, and the vast majority of the traffic coming from a few AI companies.

It’s a shame. The US won’t regulate - and certainly not under the current administration. China is unlikely to.

So what can be done? Is this how the internet splits into authorized and unauthorized parts? Or into largely blocked areas? Maybe responses could include errors that humans would spot and ignore but LLMs would not, to poison them?

When you think about the economic and environmental cost of this, it’s insane. I knew AI was expensive to train and run, but now I also have to consider whose resources they leech for training and for live queries.

That’s interesting and cool.

Let’s Encrypt is an exceptional success story.